ML&PR_7: Cross Entropy, Kullback-Leibler divergence and Jensen-Shannon divergence

mlpr7

07/08/2020 23:39

1. Cross entropy

- Cross-entropy is a measure of the difference between two probability distributions $p$ and $q$, denoted as:

$$H(p, q) = -\sum_{x} p(x) \log q(x)$$

- Cross-entropy gives the average number of bits required to represent or transmit an event drawn from one distribution when the code is built for another distribution. In the formula $H(p, q)$, $p$ is the target distribution and $q$ is the approximation of the target distribution.

- Using the method of Lagrange multipliers (with the constraint $\sum_{x} q(x) = 1$), we can prove that $H(p, q)$ reaches its minimum value when $q = p$, which means:

$$H(p, q) \geq H(p, p) = H(p)$$
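As a rough numerical illustration of the points above, the sketch below computes entropy and cross-entropy in bits for two small discrete distributions. The symbol names `p` (target) and `q` (approximation), the helper names, and the example probabilities are assumptions chosen for illustration, not values from the original post.

```python
import math

def entropy(p):
    """Shannon entropy H(p) in bits; terms with p_i = 0 contribute 0."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(p, q) in bits. Assumes q_i > 0 wherever p_i > 0."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative distributions (hypothetical values).
p = [0.5, 0.25, 0.25]   # target distribution
q = [0.25, 0.25, 0.5]   # approximation of the target

h_p = entropy(p)              # 1.5 bits
h_pq = cross_entropy(p, q)    # 1.75 bits
h_pp = cross_entropy(p, p)    # equals H(p): the minimum over q

print(h_p, h_pq, h_pp)
```

In this example the cross-entropy exceeds the entropy by 0.25 bits, and setting the approximation equal to the target closes the gap, which is consistent with the minimum being attained when the two distributions coincide.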

ML&PR_6: Entropy | 1.6.

mlpr8

19/06/2020 16:39

- In this post, we discuss information theory: its core concepts and its mathematical foundations.

ML&PR_5: Gaussian Distribution | 2.3.

MLPR5

14/06/2020 09:52

2.3. The Gaussian Distribution

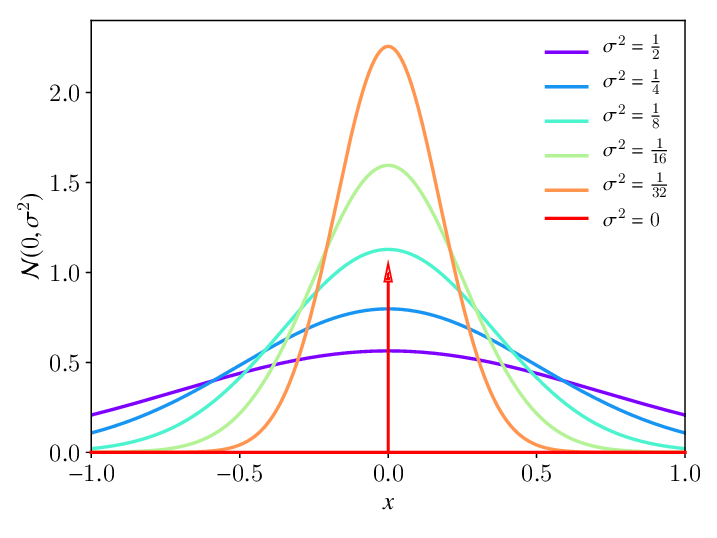

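- The Gaussian, also known as the normal distribution, is a widely used model for the distribution of continuous variables. For a single variable $x$, the Gaussian distribution can be written in the form:

$$\mathcal{N}(x \mid \mu, \sigma^2) = \frac{1}{(2\pi\sigma^2)^{1/2}} \exp\left\{-\frac{1}{2\sigma^2}(x - \mu)^2\right\}$$

where $\mu$ and $\sigma^2$ are the mean and the variance, respectively.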
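A minimal sketch of the single-variable density, assuming the standard form with a mean and a variance as parameters. The function name `gaussian_pdf` and the argument names `mu` and `sigma2` are illustrative choices, not names from the original post.

```python
import math

def gaussian_pdf(x, mu, sigma2):
    """Density of a 1-D Gaussian with mean mu and variance sigma2 (> 0)."""
    coeff = 1.0 / math.sqrt(2.0 * math.pi * sigma2)
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma2))

# The density peaks at the mean; for the standard normal the peak
# height is 1 / sqrt(2 * pi), roughly 0.3989.
peak = gaussian_pdf(0.0, mu=0.0, sigma2=1.0)
print(peak)
```

A larger variance lowers and widens the bell curve while the area under it stays equal to 1.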

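Figure 1: 1-dimensional Gaussian distribution. Source: https://www.researchgate.net/figure/Gaussian-bell-function-normal-distribution-N-0-s-2-with-varying-variance-s-2-For_fig1_334535945

- For a $D$-dimensional vector $\mathbf{x}$, the multivariate Gaussian distribution takes the form:

$$\mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}, \boldsymbol{\Sigma}) = \frac{1}{(2\pi)^{D/2}} \frac{1}{|\boldsymbol{\Sigma}|^{1/2}} \exp\left\{-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})^{T} \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \boldsymbol{\mu})\right\}$$

where the mean $\boldsymbol{\mu}$ and the covariance matrix $\boldsymbol{\Sigma}$ were introduced in ML&PR_2.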
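The multivariate density can be sketched with NumPy as follows. This is an illustration under stated assumptions: the covariance matrix is symmetric positive definite, and the function and argument names (`multivariate_gaussian_pdf`, `mu`, `cov`) are hypothetical. The quadratic form is computed by solving a linear system rather than forming the inverse explicitly.

```python
import numpy as np

def multivariate_gaussian_pdf(x, mu, cov):
    """Density of a D-dimensional Gaussian at point x.

    Assumes cov is a symmetric positive-definite D x D matrix.
    """
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    cov = np.asarray(cov, dtype=float)
    d = x.shape[0]
    diff = x - mu
    # Quadratic form (x - mu)^T cov^{-1} (x - mu), via a linear solve.
    maha = diff @ np.linalg.solve(cov, diff)
    # Normalizer: sqrt((2 * pi)^D * det(cov)).
    norm = np.sqrt((2.0 * np.pi) ** d * np.linalg.det(cov))
    return float(np.exp(-0.5 * maha) / norm)

# 2-D example with identity covariance, evaluated at the mean.
density_at_mean = multivariate_gaussian_pdf([0.0, 0.0], [0.0, 0.0], np.eye(2))
print(density_at_mean)
```

With a single dimension and a 1 x 1 covariance matrix, the function reduces to the single-variable Gaussian density.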

DL_2: Singular Value Decomposition | 2.8.

DL_2

29/05/2020 08:30

- In DL_1 we introduced matrix decomposition, which has many applications. In this article, we discuss how to use a matrix decomposition to obtain the best possible approximation of a given matrix by a matrix of low rank.

- A low rank approximation can be considered a compression of the data represented by the given matrix.
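One common way to build such an approximation is to truncate the singular value decomposition, keeping only the largest `k` singular values. The sketch below uses NumPy's `np.linalg.svd`; the helper name `low_rank_approx`, the random test matrix, and the choice `k = 2` are assumptions for illustration only.

```python
import numpy as np

def low_rank_approx(a, k):
    """Rank-k approximation of matrix a via a truncated SVD."""
    u, s, vt = np.linalg.svd(a, full_matrices=False)
    # Keep the k largest singular values and their singular vectors.
    return (u[:, :k] * s[:k]) @ vt[:k, :]

rng = np.random.default_rng(0)
a = rng.standard_normal((6, 4))   # illustrative data matrix
k = 2
approx = low_rank_approx(a, k)

# Storing the factors takes k * (6 + 4 + 1) numbers instead of 6 * 4.
error = np.linalg.norm(a - approx)   # Frobenius norm of the residual
print(approx.shape, error)
```

The Frobenius error of the truncated SVD equals the square root of the sum of the squared discarded singular values, so a matrix whose singular values decay quickly compresses well.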