DL_2: Singular Value Decomposition | 2.8.

-

date_range 29/05/2020 08:30 infosortDeep_Learninglabeldlalgebra

- We have introduced the matrix decomposition in DL_1 which have many applications. In this article, we will discuss about using the matrix decomposition to obtain the best possible approximation of a given matrix, called a matrix of low rank.

- A low rank approximation can be considered a compression of the data represented by the given matrix.

2.8 The Singular Value Decomposition (SVD)

We know that a matrix is not square, the eigendecomposition is not define and we must use a SVD instead.

Recall about the eigendecomposition, we analyze a matrix rely on its eigenvalues and eigenvectors:

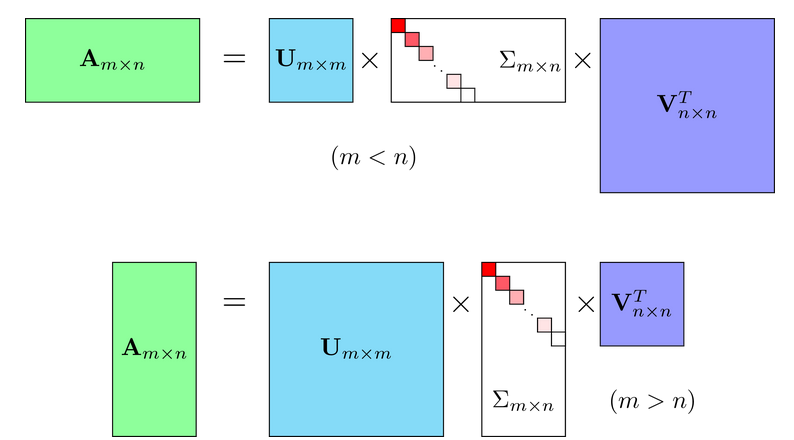

In SVD, suppose is an matrix, we have:

In there, is a diagonal matrix. The element along the diagonal of , which are are known as the singular values of and is rank of .

2.8.1. The origin of SVD

From , we have:

- We see that the element in the diagonal of are (as we denote in DL_1). Thus, are the eigenvalues of and is the eigendecomposition of .

- Because of , is a positive semidefinite matrix. The values are called singular values of .

- The columns of matrix are eigenvectors of , called left-singular vectors of .

Similarity, we can obtain:

Therefore, we can show that the columns of matrix are the eigenvectors of , called right-singular vectors of .

Both and are orthogonal matrices.

2.8.2. Compact SVD

We can rewrite to:

where each ().

We see that depends only on the first columns of , and values which are nonzero on the diagonal of . So:

where included first columns of and , included first rows of .

2.8.3. Truncated SVD

We already know that the element in the diagonal of are nonzero and decrease . In reality, some value is a big value, the remainder is small, approximately zero. Thus, we can approximate by:

where .

Theorem:

It means error of this approximate way is square root of the sum of squares of singular values that we omitted at the end of .

Prove:

We have and . Now we infer:

because are orthogonal matrices.

because of the commutative property of function.

because is a scalar.

Therefore, we obtain:

We see that the bigger the is, the smaller the lost of (compare to the origin matrix ) is. So we use Truncated SVD to approximate the origin matrix base on the amount of information we want to keep, called Low-rank approximation.

Advance

Prove the existence of SVD:

Theorem: If is a real matrix then there exist orthogonal matrices

such that

where and . Equivalently:

Proof: Let be unit vector. We consider:

- The scalar must achieve a maximum value on the set . Let be two unit vectors in and respectively where this is achieved maximum :

- The maximum value of is dot product between and then is parallel to the vector . Also, we have . Similarity, we can show that is parallel to the vector .

- can be extended into orthonormal bases for . Collect the orthonormal basis vector from into orthogonal matrices respectively, we have:

- We can show that the first column of is (considered as a exercise for you). So the first entry of is and its other entries are because is parallel to and (because is orthonormal basis). Thus, we have just explained the result of first column of . Similarity with the first row of , by . The matrix . We have:

So:

We repeat until has zero rows and zeros columns to obtain:

where and are orthogonal matrices obtained by multiplying together all the orthogonal matrices used in the procedure, and:

Equivalently:

which is the desired result.

Next, we show that . To show this, we just need to show that . We have:

where

and

We see that is a linear combination of and therefore it is unit vector and orthogonal to . Similarity, we can obtain is unit vector and . So belong to subsets of the unit spheres in respectively. As we assumed above, , therefore .

Finally, we must prove are nonnegative. We see that . Obviously, .

Reference:

- 2.7 | Deep Learning | Ian Goodfellow, Yoshua Bengio, Aaron Courville.

- Bài 26: Singular Value Decomposition

- 10. Singular Value Decomposition | Duke Computer Science